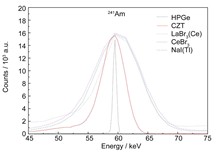

To understand the nuclear spectroscopic performance of gamma-ray spectrum detectors in different application scenarios, five common detectors were selected: HPGe, CZT, LaBr3(Ce), CeBr3, and NaI(Tl). 241Am, 137Cs, and 60Co standard sources were measured, and the detection limits of each detector for each nuclide were calculated. The results show that under environmental background conditions, the detection limits of the HPGe, CZT, LaBr3(Ce), CeBr3, and NaI(Tl) detectors for the low-energy gamma emitter 241Am are 0.039 Bq, 0.180 Bq, 0.781 Bq, 0.697 Bq, and 1.104 Bq, respectively. When the activity of 137Cs is measured in the lead chamber, the detection limits are 0.005 Bq, 0.294 Bq, 0.036 Bq, 0.037 Bq, and 0.057 Bq, respectively. The measurement and calculation results show the full-energy peak shape, source peak detection efficiency, background energy spectrum, and detection limits of the different detectors in different application scenarios, thus providing reference nuclear spectroscopic performance parameters for selecting an appropriate gamma spectrum detector to measure sample activity.
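The abstract does not state which detection-limit formula was used. As a minimal sketch, assuming the widely used Currie expression for paired measurements with equal counting times (L_D = 2.71 + 4.65√B counts at roughly 95 % confidence), a detection limit in Bq could be estimated as follows. The function name and all input values are illustrative assumptions, not data from the study.

```python
import math

def currie_mda(background_counts, efficiency, emission_prob, live_time_s):
    """Minimum detectable activity (Bq) from Currie's formula.

    Assumes a paired blank measurement with the same counting time;
    this is an illustrative choice, not necessarily the paper's method.
    """
    # Detection limit in counts for the peak region of interest.
    l_d = 2.71 + 4.65 * math.sqrt(background_counts)
    # Convert counts to activity using efficiency, emission probability, and time.
    return l_d / (efficiency * emission_prob * live_time_s)

# Hypothetical inputs: 400 background counts under the peak, 5 % full-energy
# peak efficiency, 0.851 emission probability (137Cs, 661.7 keV), 1 h live time.
mda_high_bkg = currie_mda(400, 0.05, 0.851, 3600)
# Same detector with a lower background, e.g. inside a shield (hypothetical).
mda_low_bkg = currie_mda(25, 0.05, 0.851, 3600)
print(f"{mda_high_bkg:.3f} Bq vs {mda_low_bkg:.3f} Bq")
```

The sketch shows why the same detector can have a much lower detection limit in a lead chamber: the limit scales roughly with the square root of the background counts under the peak.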

Based on the requirements of "Standard examination methods for drinking water — Part 13: Radiological indices" (GB/T 5750.13—2023) as well as domestic and foreign uncertainty assessment guidelines, this paper presents the uncertainty evaluation of gross α and gross β analyses in actual water samples. By analyzing the uncertainty sources, combining the uncertainty components, and calculating the expanded uncertainty, the contribution of each component to the total uncertainty was discussed. The analysis results for the water sample are gross α: (1.01±0.14) Bq/L (k=2) and gross β: (0.60±0.09) Bq/L (k=2). The uncertainty mainly originated from the net counting rate, counting efficiency, and chemical recovery, which account for 52.3%, 19.8%, and 20.0% of the total for gross α, respectively, and 59.9%, 19.0%, and 13.6% for gross β, respectively.
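The combination step can be sketched as follows, assuming uncorrelated relative uncertainty components combined in quadrature and contributions expressed as shares of the combined variance. Both conventions are assumptions here, since the abstract does not give the formulas. The component values are hypothetical and are not taken from the paper.

```python
import math

def uncertainty_budget(value, rel_components, k=2.0):
    """Combine relative standard uncertainties and report each variance share.

    rel_components maps a component name to its relative standard uncertainty.
    Components are assumed to be uncorrelated.
    """
    # Combined relative standard uncertainty (root sum of squares).
    u_c_rel = math.sqrt(sum(u ** 2 for u in rel_components.values()))
    # Expanded uncertainty in the units of the measured value.
    expanded = k * u_c_rel * value
    # Percentage share of each component in the combined variance.
    shares = {name: 100.0 * u ** 2 / u_c_rel ** 2
              for name, u in rel_components.items()}
    return u_c_rel, expanded, shares

# Hypothetical relative standard uncertainties for a gross alpha result.
components = {
    "net counting rate": 0.05,
    "counting efficiency": 0.03,
    "chemical recovery": 0.03,
    "other": 0.02,
}
u_c_rel, expanded, shares = uncertainty_budget(1.01, components, k=2.0)
for name, share in shares.items():
    print(f"{name}: {share:.1f} %")
print(f"expanded uncertainty: {expanded:.2f} Bq/L (k=2)")
```

With this convention the shares always sum to 100 %, so the largest component (typically the net counting rate at low activity) is where longer counting times bring the most benefit.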

Electron beam irradiation processing has become an important component of the nuclear technology industry, with low-energy electron beams increasingly being applied in processes such as coating curing, wastewater treatment, and food preservation. Accurate measurement of irradiation parameters is essential to ensure irradiation quality. However, standardized protocols for electron beam dosimetry below 300 keV have not yet been established. As a result, parameter measurements are often benchmarked against the 10 MeV electron linear accelerator, which introduces systematic biases due to inconsistencies among measured objects. In this study, a novel method for measuring low-energy electron beam energy, combining experimental testing and mathematical simulations, was applied to electron beams with energies below 300 keV. Additionally, an absorbed dose measurement device based on calorimetry was developed. Relationships between absorbed dose and beam intensity, displacement velocity, and other parameters were explored to obtain absolute measurements of absorbed doses from a low-energy electron beam. The energy parameters of low-energy electrons were experimentally determined using a dose step-stacking method combined with simulations of the energy deposition depth distribution curve. The low-energy electron absorbed dose parameters were then measured in the range of 140 keV. The absorbed dose was measured using calorimetry in the range 1–120 kGy, with a measurement uncertainty of 11% (k=2). This study provides a reliable measurement method for low-energy electron beam irradiation processing.
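The principle behind calorimetric dosimetry can be illustrated with a minimal sketch: if all deposited energy appears as heat and heat losses are negligible, the absorbed dose equals the absorber's specific heat capacity times its temperature rise (1 Gy = 1 J/kg). The function names, the specific heat value, and the temperature rise below are hypothetical. Only the 11% (k=2) relative expanded uncertainty comes from the abstract.

```python
def absorbed_dose_kgy(specific_heat_j_per_kg_k, delta_t_k):
    """Absorbed dose in kGy from an ideal calorimeter absorber.

    Assumes no heat loss and a constant specific heat over the temperature
    rise; real devices need corrections that are not modelled here.
    """
    dose_gy = specific_heat_j_per_kg_k * delta_t_k  # 1 Gy = 1 J/kg
    return dose_gy / 1000.0

def expanded_interval(dose_kgy, rel_expanded_uncertainty=0.11):
    """Interval dose +/- U, with U given as a relative expanded uncertainty."""
    half_width = dose_kgy * rel_expanded_uncertainty
    return dose_kgy - half_width, dose_kgy + half_width

# Hypothetical absorber: 750 J/(kg K) specific heat, 40 K temperature rise.
dose = absorbed_dose_kgy(specific_heat_j_per_kg_k=750.0, delta_t_k=40.0)
low, high = expanded_interval(dose)
print(f"dose = {dose:.1f} kGy, interval = [{low:.1f}, {high:.1f}] kGy")
```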

This study uses MATLAB App Designer to build an interface program, based on the principles of point kernel integration and the Monte Carlo method, for evaluating the cobalt source layout effect of gamma irradiation equipment. The interface program can simulate the manual and automatic layout calculation processes of cobalt sources, display real-time changes in the cobalt source position of the operation module and the entire source intensity effect map, and rapidly calculate the surface dose distribution of the source rack and the spatial dose distribution map of the product. Under the expected parameter conditions, the interface program can automatically calculate and select the optimal source layout scheme after information such as the cobalt source activity and position is imported. The layout scheme exported by the interface program can be used to execute the GEANT4 Monte Carlo program directly, thereby verifying the accuracy of the layout scheme. Comparisons of the concentration and dispersion of different cobalt source activities show that the interface program agrees well with the GEANT4 calculations. Adding cobalt sources or adjusting the layout plan is important for selecting the optimal plan, improving the utilization rate of cobalt sources, and increasing the production capacity of irradiation equipment.
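Point kernel integration can be sketched in a few lines: each cobalt source is treated as a point emitter, and the dose rate at a target point is the sum of inverse-square contributions with exponential attenuation. The sketch below is a simplified illustration in Python, not the MATLAB program described above. It omits buildup factors and source self-absorption, and the function name, the dose-rate constant, and the source data are hypothetical.

```python
import math

def point_kernel_dose_rate(sources, target, gamma_const=1.0, mu=0.0):
    """Sum point-source contributions at a target position.

    sources: list of (x, y, z, activity) tuples.
    gamma_const: dose-rate constant (hypothetical units).
    mu: linear attenuation coefficient of the medium (1/length).
    Buildup and self-absorption are ignored in this simplified kernel.
    """
    total = 0.0
    for x, y, z, activity in sources:
        r = math.dist((x, y, z), target)  # source-to-target distance
        total += gamma_const * activity * math.exp(-mu * r) / r ** 2
    return total

# Hypothetical layouts with the same total activity (8 units).
concentrated = [(0.0, 0.0, 0.0, 8.0)]
dispersed = [(0.0, -1.0, 0.0, 4.0), (0.0, 1.0, 0.0, 4.0)]
target = (2.0, 0.0, 0.0)
rate_concentrated = point_kernel_dose_rate(concentrated, target)
rate_dispersed = point_kernel_dose_rate(dispersed, target)
print(rate_concentrated, rate_dispersed)
```

Evaluating such a sum over a grid of target points gives a dose map for a candidate layout, which is the kind of fast estimate that can then be checked against a full Monte Carlo run.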

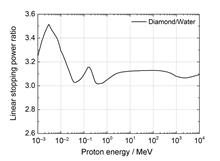

Diamond is considered a promising detector material in radiobiological studies. However, the difference in density between diamond and tissue implies that their energy deposition spectra are not identical, even for diamond and tissue samples of the same size. In this work, the energy deposition spectrum in diamond was converted to match that of a tissue sample of the same size, using a method based on a mathematical model of the energy deposition distribution and the Fourier transform. The results indicate that the spectra converted from diamond to tissue align closely with those of the tissue. Nevertheless, the applicability of this method is constrained by the mathematical model of the energy deposition distribution. Thus, developing a mathematical model that describes the energy deposition spectrum under various conditions can enhance the applicability of this conversion method.

During the normal operation of cooling towers in nuclear power plants, warm water vapor is discharged through the cooling tower outlet and mixes with the surrounding air. A portion of this vapor condenses and forms visible plumes. This paper uses computational fluid dynamics (CFD) numerical simulation software to simulate six nuclear power units and their supporting cooling towers at a nuclear power plant site, and studies the impact of plumes emitted from the six cooling towers under different wind directions on the radioactive diffusion of the nuclear power plant discharge. The results indicate that when the arrangement direction of the cooling towers is consistent with the wind direction, the influence of the cooling towers on the environmental flow field extends to a distance of approximately 1 500 m in the downwind direction. When the cooling towers are located in the downwind direction of the reactor unit, the emission from the cooling towers increases the pollution diffusion factor within a 1 000 m range of the chimney. As the environmental wind speed increases, the impact of the cooling tower emissions on the diffusion of pollutants from the chimney decreases.